Research and Evaluation Instruments

Over the years, I have designed, developed, and validated research instruments on a wide range of constructs, and several of them are provided below. All instruments are freely available for research and evaluation purposes, provided that proper attribution is given in any published work. I also list citations to the articles that use each instrument. Please contact me if you have any questions or concerns.

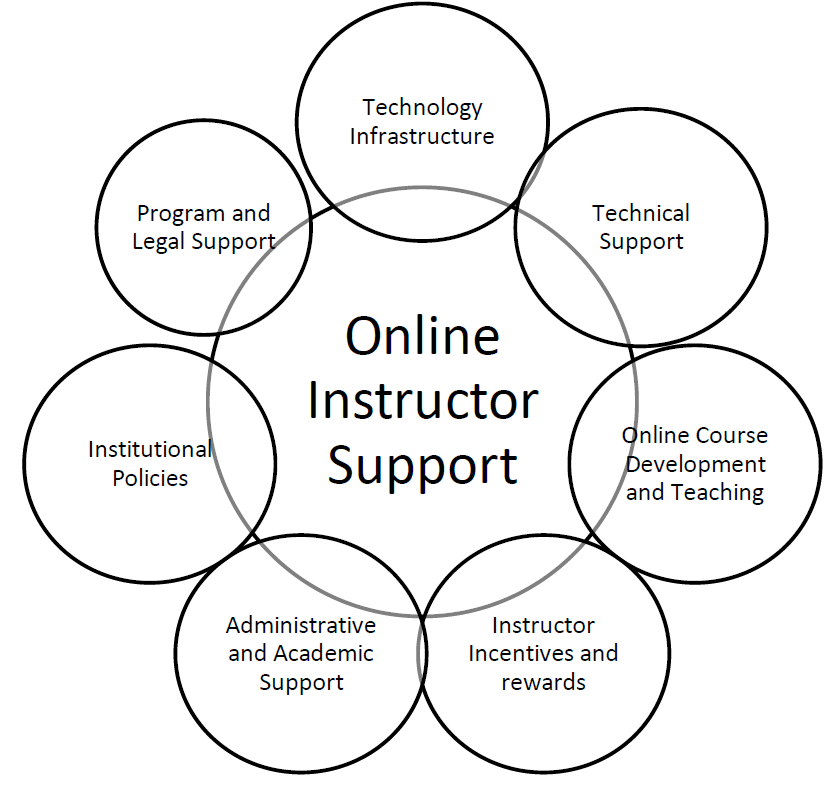

Online Instructor Support Survey (OISS)

The Online Instructor Support Survey (OISS) is based on seven areas of support for online instructors identified in the research literature: (a) technology infrastructure; (b) technical support; (c) online course development and teaching; (d) instructor rewards and incentives; (e) administrative and academic support; (f) institutional policies and culture; and (g) program and legal support. The OISS uses a modified 5-point Likert scale ranging from “not at all” to “to a very great extent” (1 = Not at all, 2 = To a small extent, 3 = To some extent, 4 = To a great extent, 5 = To a very great extent). We collected data from N = 275 online instructors and performed an exploratory factor analysis (EFA) to provide preliminary validity evidence for the scale. The seven factors together explained 67% of the variability in the data, and each factor was internally consistent.
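The OISS response options map directly onto the numeric codes 1 to 5. The sketch below codes one respondent's answers and averages them within a factor. It is illustrative only: the item identifiers and the assignment of items to a factor are hypothetical placeholders, and averaging items into a factor score is an assumed convention rather than a scoring rule stated here.

```python
# Numeric codes for the OISS response options listed above.
OISS_CODES = {
    "not at all": 1,
    "to a small extent": 2,
    "to some extent": 3,
    "to a great extent": 4,
    "to a very great extent": 5,
}


def code_response(label: str) -> int:
    """Convert a response label to its 1-5 code (case-insensitive)."""
    return OISS_CODES[label.strip().lower()]


def factor_score(responses: dict[str, str], items: list[str]) -> float:
    """Mean code across the items assumed to belong to one factor."""
    codes = [code_response(responses[item]) for item in items]
    return sum(codes) / len(codes)


# Hypothetical respondent; item IDs and factor membership are placeholders.
respondent = {
    "q1": "To a great extent",
    "q2": "To some extent",
    "q3": "To a very great extent",
}
technical_support_items = ["q1", "q2", "q3"]  # hypothetical assignment
print(factor_score(respondent, technical_support_items))  # 4.0
```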

References

Kumar, S., Ritzhaupt, A. D., & Pedro, N. S. (2022). Development and validation of the Online Instructor Support Survey (OISS). Online Learning, 26(1), 221–244.

Download Instrument: OISS in MS Word Format

Abbreviated Technology Anxiety Scale (ATAS)

We developed the Abbreviated Technology Anxiety Scale (ATAS) and applied measurement theory to provide validity and reliability evidence. The study was implemented in multiple phases, including expert panel reviews of the content and quality of the items and three rounds of data collection and analysis. ATAS scores were found to be internally consistent and to correlate with other known measures of technology and anxiety. The initial scale had 25 items; the final scale has 11 items measuring a single construct. The ATAS uses a modified 5-point Likert scale format (5 = Strongly Agree; 4 = Agree; 3 = Neither agree, nor disagree; 2 = Disagree; 1 = Strongly Disagree).
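Internal consistency for a single-construct scale such as the ATAS is commonly summarized with Cronbach's alpha. The sketch below assumes alpha as the statistic (the coefficient used is not named above) and runs on a small made-up response matrix, not ATAS data.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Population variances are used throughout; the ratio is the same
    with sample variances as long as one kind is used consistently.
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*scores))  # transpose rows into item columns
    item_var_sum = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical data: 4 respondents x 3 items on a 1-5 scale.
responses = [
    [5, 4, 5],
    [4, 4, 4],
    [2, 3, 2],
    [1, 2, 1],
]
print(round(cronbach_alpha(responses), 3))  # 0.956
```

For the ATAS itself, each row would hold one respondent's 11 item scores.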

References

Wilson, M., Huggins, A. C., Ritzhaupt, A. D., & Ruggles, K. (In press). Development of the Abbreviated Technology Anxiety Scale (ATAS). Behavior Research Methods.

Download Instrument: ATAS in MS Word Format

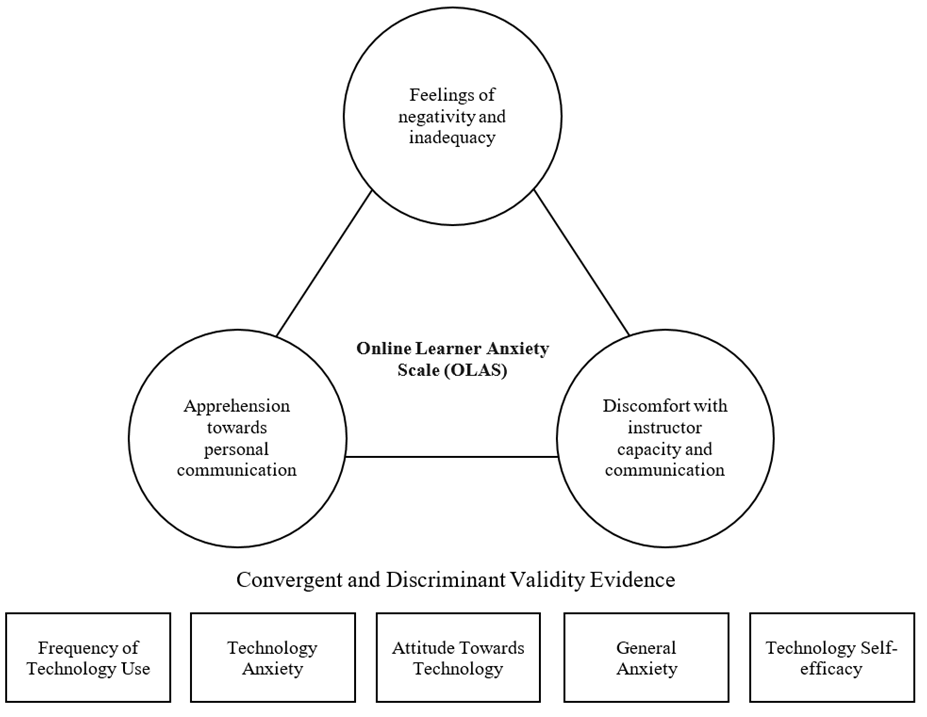

Online Learner Anxiety Scale (OLAS)

The Online Learner Anxiety Scale (OLAS) was inspired by existing research and theories in online learning. We define online learning anxiety as “The feelings of fearfulness, apprehension, and uneasiness that a learner experiences in an online learning environment, while interacting with content, instructor, and/or fellow students.” The OLAS has been administered only once, to a sample of N = 297 undergraduate students. The OLAS has 24 items using a modified 5-point Likert scale format (5 = Strongly Agree; 4 = Agree; 3 = Neither agree, nor disagree; 2 = Disagree; 1 = Strongly Disagree). An exploratory factor analysis (EFA) of these data yielded three internally consistent factors associated with online learner anxiety: 1) Online learner feelings of negativity and inadequacy, 2) Online learner apprehension towards personal communication, and 3) Online learner discomfort with instructor capacity and communication. To provide additional validity evidence, OLAS scores were correlated with five other factors related to technology and anxiety.
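Correlating OLAS scores with related measures is a form of convergent validity evidence. A minimal Pearson correlation sketch follows; Pearson's r is an assumption (the coefficient is not named above), and the score vectors are invented for illustration.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scale means for five students (not OLAS data).
olas_scores = [2.1, 3.4, 2.8, 4.0, 1.5]
other_anxiety = [1.9, 3.6, 2.5, 4.2, 1.8]
print(round(pearson_r(olas_scores, other_anxiety), 3))
```

A strong positive r with another anxiety measure would support the interpretation of OLAS scores; the function raises `ZeroDivisionError` if either list is constant.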

References

Ritzhaupt, A. D., Rehman, M. S., Wilson, M., & Ruggles, K. (In press). Exploring the factors associated with undergraduate students’ online learning anxiety: Development of the Online Learner Anxiety Scale (OLAS). Online Learning.

Download Instrument: OLAS in MS Word Format

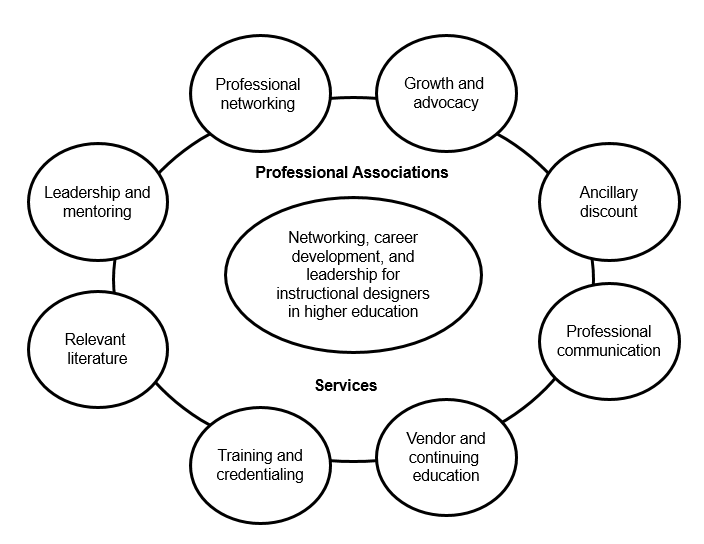

Instructional Designer in Higher Education Professional Association Survey (IDHEPAS)

The IDHEPAS is based on a conceptual framework connecting professional association services to the existing research on the leadership, career development, and networking of instructional designers in higher education. The IDHEPAS has 44 items using a modified 5-point Likert scale format (5 = Strongly Agree; 4 = Agree; 3 = Neither agree, nor disagree; 2 = Disagree; 1 = Strongly Disagree). The analyses yielded eight internally consistent factors that together explain approximately 71% of the variability in the data: 1) Professional networking services, 2) Growth and advocacy services, 3) Professional communication services, 4) Ancillary discount services, 5) Leadership and mentoring services, 6) Relevant literature services, 7) Training and credentialing services, and 8) Vendor and continuing education services.
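A percentage such as "71% of the variability" is commonly computed from the factor loadings: with standardized items, total variance equals the number of items, so each factor's share is its sum of squared loadings divided by that number. The sketch below shows the arithmetic on a tiny made-up loading matrix (4 items, 2 factors); it assumes an unrotated or orthogonally rotated solution and does not reproduce the IDHEPAS analysis.

```python
def variance_explained(loadings: list[list[float]]) -> tuple[list[float], float]:
    """Per-factor and total proportion of variance explained.

    Assumes standardized items (total variance = number of items) and an
    unrotated or orthogonal solution, so squared loadings can be summed.
    """
    n_items = len(loadings)
    n_factors = len(loadings[0])
    per_factor = [
        sum(row[j] ** 2 for row in loadings) / n_items for j in range(n_factors)
    ]
    return per_factor, sum(per_factor)


# Hypothetical loadings: rows are items, columns are factors.
loadings = [
    [0.8, 0.1],
    [0.7, 0.2],
    [0.1, 0.9],
    [0.2, 0.6],
]
per_factor, total = variance_explained(loadings)
print([round(p, 3) for p in per_factor], round(total, 3))  # [0.295, 0.305] 0.6
```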

References

Ritzhaupt, A. D., Stefaniak, J. E., Budhrani, K. S., & Conklin, S. L. (2020). A study on the services motivating instructional designers in higher education to engage in professional associations: Implications for research and practice. Journal of Applied Instructional Design, 9(2).

Download Instrument: IDHEPAS in MS Word Format

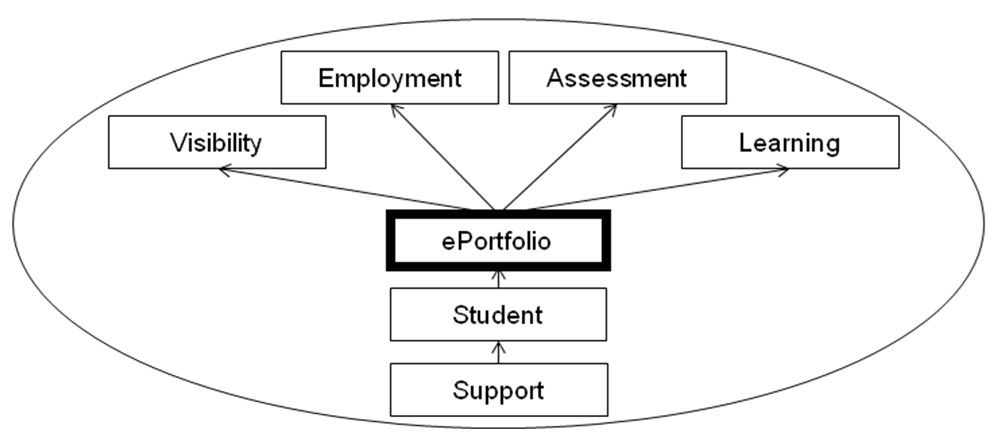

Electronic Portfolio Student Perspective Instrument (EPSPI)

The Electronic Portfolio Student Perspective Instrument (EPSPI) is designed to measure student perspectives on ePortfolios across five factors: visibility, employment, assessment, learning, and support. The EPSPI is based on a conceptual framework, visualized in the accompanying figure. The original EPSPI contains 43 unique items rated on a modified Likert scale (Strongly Disagree; Disagree; Neither Agree, Nor Disagree; Agree; and Strongly Agree). In the exploratory factor analysis, the visibility factor absorbed the employment items, and all negatively stated items were removed from the scale. The current form of the EPSPI is the product of a series of studies leading to the design, development, and, ultimately, validation of the survey tool.

References

Ritzhaupt, A. D., Ndoye, A., & Parker, M. A. (2010). Validation of the Electronic Portfolio Student Perspective Instrument (EPSPI): Conditions under a different integration initiative. Journal of Computing in Teacher Education, 26(3), 111–119.

Ritzhaupt, A. D., Singh, O., Seyferth, T., & Dedrick, R. F. (2008). Development of the Electronic Portfolio Student Perspective Instrument: An ePortfolio integration initiative. Journal of Computing in Higher Education, 19(2), 47–71.

Ritzhaupt, A. D., & Singh, O. (2006). Student perspectives of ePortfolios in computing education. In R. Menezes (Ed.), Proceedings of the Association for Computing Machinery Southeast Conference (pp. 152–157). Melbourne, FL: ACM.

Ritzhaupt, A. D., & Singh, O. (2006). Student perspectives of organizational uses of ePortfolios in higher education. In E. Pearson & P. Bohman (Eds.), Proceedings of ED-Media: World Conference on Educational Multimedia, Hypermedia, and Telecommunications (pp. 1717–1722). Orlando, FL: AACE.

Download Instrument: EPSPI in MS Word Format

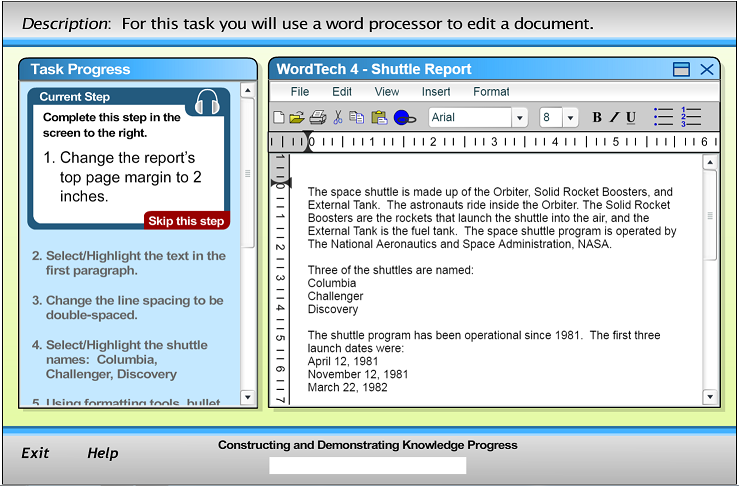

Student Tool for Technology Literacy (ST2L)

The Student Tool for Technology Literacy (ST2L) was designed to address the No Child Left Behind (NCLB) Act, Title II, Part D, which required states to improve students’ academic performance through technology and to ensure that all students are technology literate by the end of 8th grade. While many technology literacy measures have been created over the years, few have adopted a performance-based approach in an interactive environment that closely resembles common software tools. The ST2L was originally framed around the first ISTE Standards for Students from the 1990s and was later updated to align with the second generation of the ISTE Standards for Students. The ST2L has been shown to provide valid and reliable scores of technology literacy for its target population of middle school students. The tool has since been used in several research and evaluation programs and has been taken by more than 100,000 students across Florida.

References

Huggins, A. C., Ritzhaupt, A. D., & Dawson, K. (2014). Measuring information and communication technology literacy using a performance assessment: Validation of the Student Tool for Technology Literacy (ST2L). Computers & Education, 77, 1–12.

Hohlfeld, T. N., Ritzhaupt, A. D., & Barron, A. E. (2013). Are gender differences in perceived and demonstrated technology literacy significant? It depends on the model. Educational Technology Research and Development, 61(4), 639–663.

Ritzhaupt, A. D., Liu, F., Dawson, K., & Barron, A. E. (2013). Differences in student information and communication technology literacy based on socio-economic status, ethnicity, and gender: Evidence of a Digital Divide in Florida schools. Journal of Research on Technology in Education, 45(4), 291–307.

Hohlfeld, T. N., Ritzhaupt, A. D., & Barron, A. E. (2010). Development and validation of the Student Tool for Technology Literacy (ST2L). Journal of Research on Technology in Education, 42(4), 361–389.

View Instrument: ST2L Indicators in MS Word Document

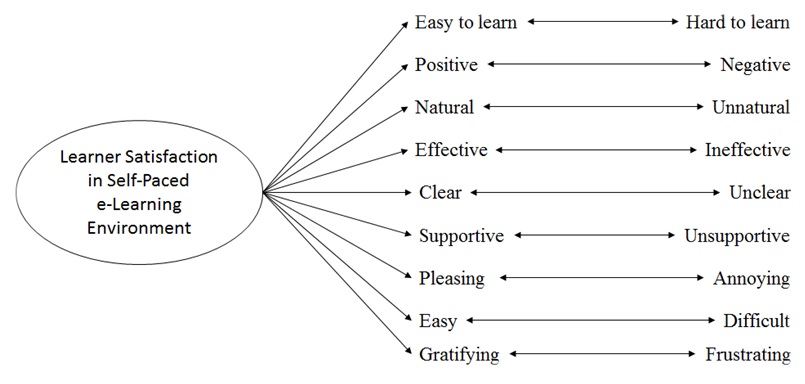

e-Learner Satisfaction Scale (eLSS)

The e-Learner Satisfaction Scale (eLSS) is designed to be a parsimonious, flexible, objective, valid, and reliable tool for reporting learner satisfaction with self-paced e-learning environments in either research or practice. The tool uses a 9-item semantic differential scale format to collect data from learners. The accompanying figure visualizes the conceptual framework for the eLSS in relation to the nine survey items, along with the positive and negative bipolar adjectives used to gauge a learner’s level of satisfaction with a wide range of self-paced e-learning solutions. The nine items are specific enough to capture the learner’s perception of the e-learning experience, yet general enough to apply to virtually any self-paced e-learning situation (e.g., eBooks, online instructional videos). The adjectives selected for the scale were intended to correlate positively with each other and to operationalize a single, unidimensional construct of learner satisfaction with self-paced e-learning environments.
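Because the nine eLSS items are intended to form a single construct, one common way to report a learner's satisfaction is the mean of the nine item ratings. The sketch below assumes a 7-point semantic differential coded 1 (negative adjective) to 7 (positive adjective); the number of scale points, the coding direction, and which items (if any) are reverse-keyed are all assumptions, not details given above.

```python
SCALE_MIN, SCALE_MAX = 1, 7  # assumed 7-point semantic differential
N_ITEMS = 9


def satisfaction_score(
    ratings: list[int], reversed_items: frozenset[int] = frozenset()
) -> float:
    """Mean of the nine item ratings after reverse-coding flagged items.

    reversed_items holds 0-based indices of items whose positive adjective
    sits at the low end of the scale (hypothetical; depends on the form).
    """
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    coded = []
    for i, r in enumerate(ratings):
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"rating {r} for item {i} is out of range")
        # Reverse-code so that a higher value always means more satisfied.
        coded.append(SCALE_MIN + SCALE_MAX - r if i in reversed_items else r)
    return sum(coded) / N_ITEMS


# Hypothetical learner; item index 2 is assumed to be reverse-keyed.
print(satisfaction_score([6, 7, 2, 5, 6, 6, 7, 5, 6], frozenset({2})))  # 6.0
```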

References

Ritzhaupt, A. D. (2019). Measuring learner satisfaction in self-paced e-learning environments: Validation of the e-Learner Satisfaction Scale (eLSS). International Journal of E-Learning, 18(3), 279–299.

Download Instrument: eLSS in MS Word Format

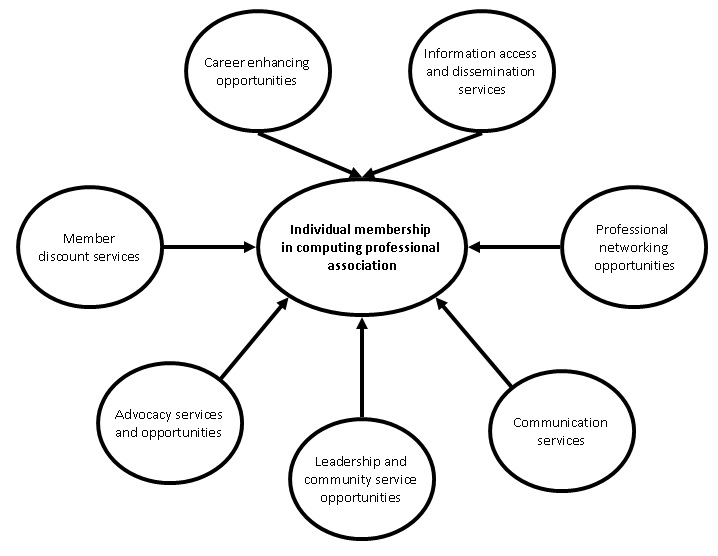

Ideal Computing Professional Association Survey (ICPAS)

The Ideal Computing Professional Association Survey (ICPAS) was designed, developed, and initially validated with members of a computing professional association to provide preliminary validity and reliability evidence for the survey. This research was driven by the recognized need for a system that effectively measures the factors influencing professional association membership. The ICPAS was developed from a conceptual framework (shown in the accompanying figure), a focus group of computing professionals, and a review panel of experts, and was subsequently deployed within the Association of Information Technology Professionals (AITP). The ICPAS provides a reliable measurement system that computing professional association leaders can use to make informed decisions and to benchmark professional needs and motivations. The final instrument has 52 items and uses a modified Likert scale (Strongly Disagree; Disagree; Neither Agree, nor Disagree; Agree; Strongly Agree).

References

Ritzhaupt, A. D., Umapathy, K., & Jamba, L. (2012). A study on services motivating computing professional association membership. International Journal of Human Capital and Information Technology Professionals (IJHCITP), 3(1), 54–70.

Ritzhaupt, A. D., Umapathy, K., & Jamba, L. (2008). Computing professional association membership: An exploration of membership needs and motivations. Journal of Information Systems Applied Research, 1(4), 1–23.

Download Instrument: ICPAS in MS Word Format

Educational Technologist Competency Survey (ETCS)

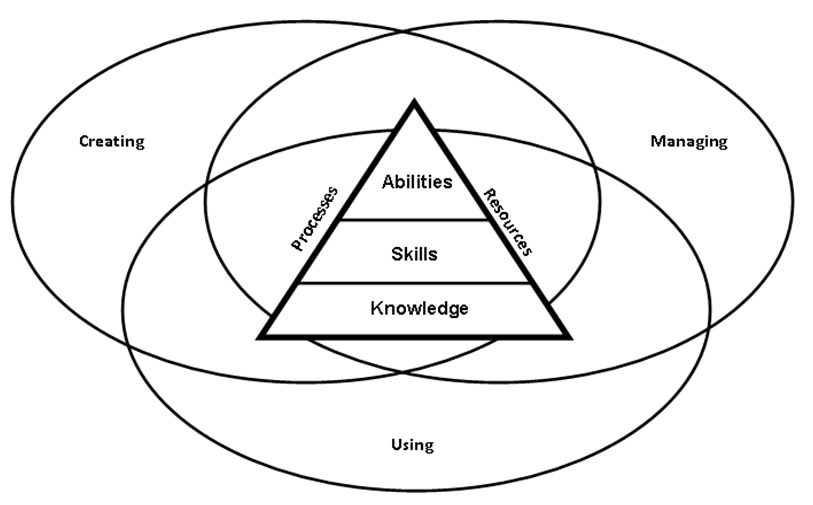

The Educational Technologist Competency Survey (ETCS) was designed, developed, and validated based on a conceptual framework that emphasizes the definition of educational technology and the associated knowledge, skill, and ability (KSA) statements. Using the conceptual framework shown in the accompanying figure, the ETCS was developed through 1) a review of the extant literature on the competencies of educational technology professionals, 2) a job announcement analysis of 400 postings from five relevant databases, 3) extraction and merging of KSA statements from the job announcements and the literature, and 4) administration of the survey to a wide variety of educational technology professionals. This process yielded 176 competencies, organized as KSA statements. The KSA statements were assigned the following response scale: Not important at all (1); Important to a small extent (2); to some extent (3); to a moderate extent (4); and to a great extent (5). This response scale was adopted to gauge the relative importance of each competency from an educational technology professional’s perspective. The instructions for participants read: “Please indicate the importance of the following (knowledge/skill/ability) statements in creating, using, and managing learning resources and processes.”
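Because the ETCS response scale gauges relative importance, a natural summary is each statement's mean rating across respondents, sorted from most to least important. A minimal sketch with made-up statements and ratings follows; it is not the analysis reported in the ETCS studies, and the statement texts are hypothetical.

```python
def rank_by_importance(ratings: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Return (statement, mean rating) pairs sorted by descending mean.

    Ties are broken alphabetically so the order is deterministic.
    """
    means = {stmt: sum(vals) / len(vals) for stmt, vals in ratings.items()}
    return sorted(means.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical KSA statements with ratings on the 1-5 importance scale.
ratings = {
    "Knowledge of learning theories": [5, 4, 5, 4],
    "Skill in video editing": [3, 4, 3, 2],
    "Ability to manage projects": [4, 5, 4, 5],
}
for statement, m in rank_by_importance(ratings):
    print(f"{m:.2f}  {statement}")
```

With these made-up ratings, the two statements averaging 4.50 are listed first (alphabetically), followed by the one averaging 3.00.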

References

Ritzhaupt, A. D., Martin, F., Pastore, R., & Kang, Y. (2018). Development and validation of the educational technologist competencies survey (ETCS): knowledge, skills, and abilities. Journal of Computing in Higher Education, 30(1), 3–33.

Kang, Y., & Ritzhaupt, A. D. (2015). A job announcement analysis of educational technology professional positions: Knowledge, skills, and abilities. Journal of Educational Technology Systems, 43(3), 231–256.

Ritzhaupt, A. D., & Martin, F. (2014). Development and validation of the educational technologist multimedia competency survey. Educational Technology Research and Development, 62(1), 13–33.

Ritzhaupt, A. D., Martin, F., & Daniels, K. (2010). Multimedia competencies for an educational technologist: A survey of professionals and job announcement analysis. Journal of Educational Multimedia and Hypermedia, 19(4), 421–449.

Download Instrument: ETCS in MS Word Format